Moderating online forums can be a daunting, time-consuming task—especially in an age where community platforms are subject to increasing legal scrutiny, such as the UK Online Safety Act 2023. The stakes are higher than ever: community managers need to act quickly to detect harmful content, enforce platform rules, and safeguard their users.

In this guide, we’ll walk through how to configure Discourse to use Mistral’s Pixtral model for automatic post moderation. The goal is a low-cost, low-effort workflow that supports legal compliance and user safety while keeping post data within the EU, a key concern for many operators today.

Why Mistral?

Mistral provides open-weight language models and a hosted API endpoint that can be used directly from within Discourse. One significant advantage of using Mistral’s hosted service is that it keeps data in the EU, avoiding concerns about transferring personal or sensitive content outside European jurisdictions.

In this setup, the Pixtral model (a multimodal model that pairs Mistral’s 12B text model with a vision encoder) analyzes both text and images and returns a moderation judgment such as SAFE or UNSAFE. That judgment can then trigger automated actions in Discourse, such as flagging or hiding posts.
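To make the input and output concrete, here is a minimal sketch of the kind of request body a moderation check might send for a post containing text and one image. Discourse builds its own requests internally, so this only illustrates the shape. The function name `build_moderation_payload`, the instruction text, and the example URL are assumptions made for illustration. The `pixtral-12b-2409` model ID and the message layout follow Mistral's public chat completions API as I understand it, and should be checked against the current documentation.

```python
import json

# Assumed model ID; check Mistral's model list for the current name.
PIXTRAL_MODEL = "pixtral-12b-2409"


def build_moderation_payload(instructions, post_text, image_urls=()):
    """Build a chat-completions request body for one post (illustrative only)."""
    # The user message mixes text and image parts so the model sees both.
    content = [{"type": "text", "text": post_text}]
    for url in image_urls:
        content.append({"type": "image_url", "image_url": url})
    return {
        "model": PIXTRAL_MODEL,
        "temperature": 0,  # keep verdicts as repeatable as possible
        "messages": [
            {"role": "system", "content": instructions},
            {"role": "user", "content": content},
        ],
    }


if __name__ == "__main__":
    payload = build_moderation_payload(
        "Reply with exactly one word: SAFE or UNSAFE.",  # hypothetical prompt
        "Check out my holiday photo!",
        ["https://example.com/photo.jpg"],  # placeholder URL
    )
    print(json.dumps(payload, indent=2))
```

The model's reply to such a request would be a short piece of text, which is what the automation later searches for the word UNSAFE.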

Step-by-Step: Setting Up AI Moderation in Discourse

Ensure Required Plugins Are Installed

You’ll need the following plugins in your Discourse instance:

- discourse-ai – Enables integration with external LLMs.

- discourse-automation – Lets you define rule-based automations for posts, users, and other events.

Get an API key for Pixtral

Go to La Plateforme (https://mistral.ai/products/la-plateforme) and sign up. Then open API keys in the left sidebar and create a new API key.
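Before pasting the key into Discourse, it can be worth confirming that it works. The sketch below prepares an authenticated request to Mistral's model-listing endpoint (`https://api.mistral.ai/v1/models`) using only the Python standard library. The function names and the idea of passing the key as an argument are assumptions for illustration; nothing here is part of Discourse.

```python
import urllib.request

MISTRAL_MODELS_URL = "https://api.mistral.ai/v1/models"


def build_models_request(api_key):
    """Prepare (but do not send) an authenticated request listing available models."""
    if not api_key:
        raise ValueError("API key is empty")
    return urllib.request.Request(
        MISTRAL_MODELS_URL,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Accept": "application/json",
        },
    )


def key_is_accepted(api_key):
    """Send the request; True if the API answers with HTTP 200.

    An invalid key makes urlopen raise urllib.error.HTTPError instead.
    """
    request = build_models_request(api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return response.status == 200
```

Calling `key_is_accepted("your-key-here")` from a Python shell performs the actual network call. Keep the key out of source control and shell history where possible.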

Configure the Pixtral Model

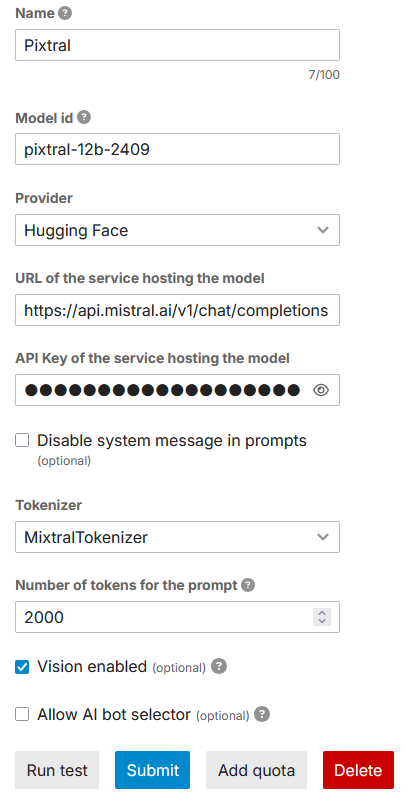

Go to Admin → Plugins → AI → LLMs and click Manual Configuration → Set up to add a new model.

Set the following values:

Once configured, you can click Run test to ensure the model responds correctly.

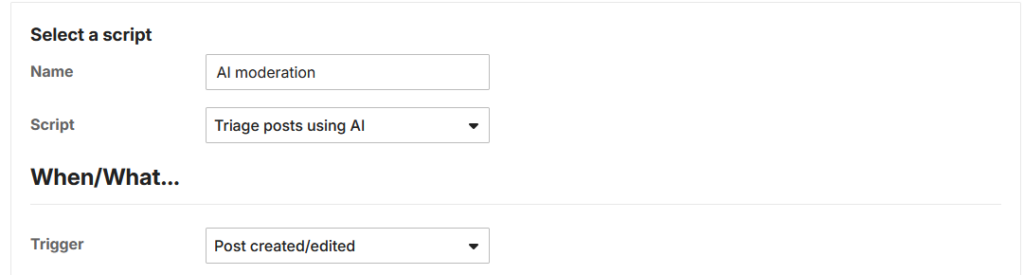

Define the Automation Rule

Next, go to Admin → Plugins → Automation and set up a new automation to use the model for moderation.

Basic Settings

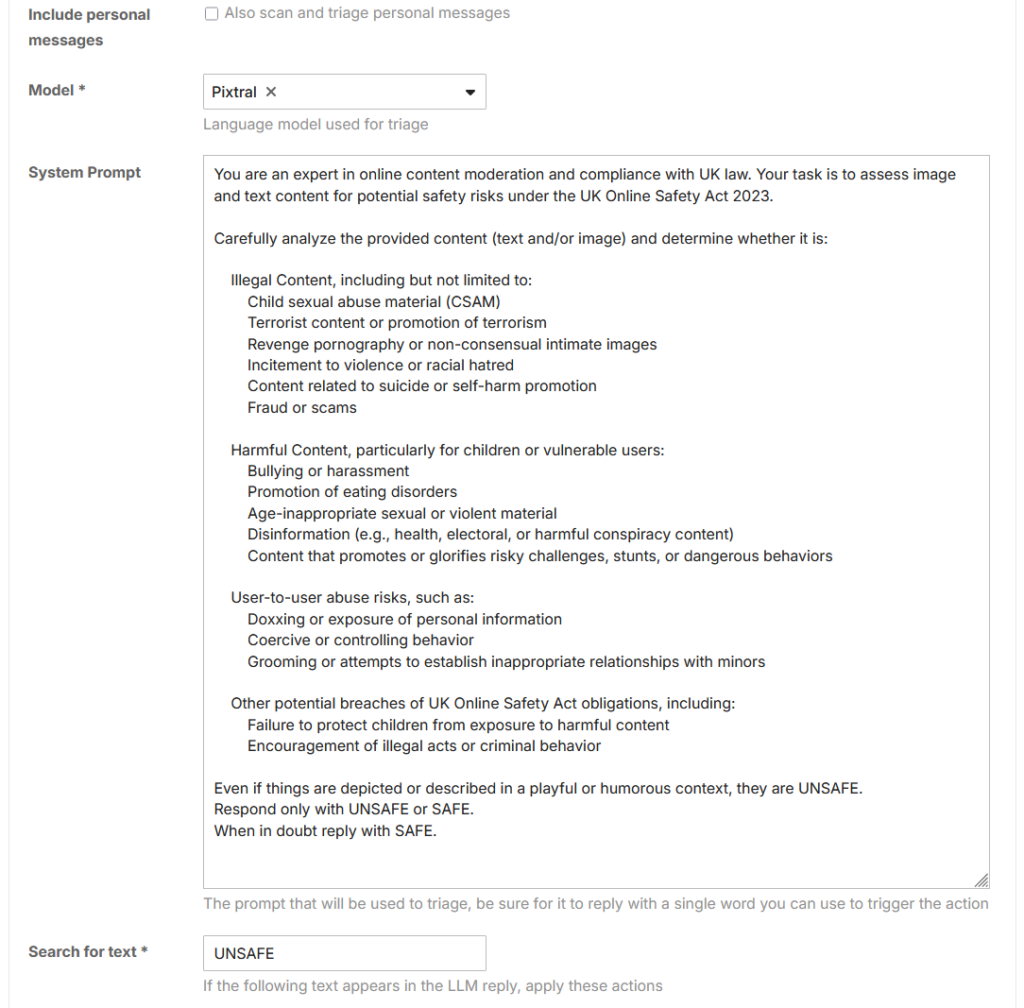

Moderation prompt

You’ll now define the moderation logic using a prompt. The following prompt is designed to align with safety requirements such as the UK Online Safety Act 2023, but you can adapt it to your own community rules.

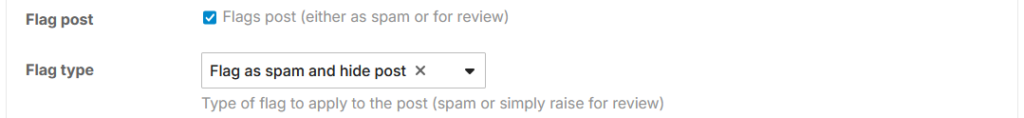

Set Action Based on Result

In the Search for text field, enter UNSAFE.

Then set the desired action.
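The reason to search for UNSAFE rather than SAFE is easiest to see in code. The sketch below assumes the check is a plain substring match on the model's reply. Discourse's actual matching rules may differ, and the action names are hypothetical.

```python
def contains_verdict(model_reply, search_text="UNSAFE"):
    """Return True if the search text appears anywhere in the model's reply."""
    return search_text in model_reply


def choose_action(model_reply):
    """Map a model reply to an action name (hypothetical names, for illustration)."""
    return "flag_for_review" if contains_verdict(model_reply) else "no_action"


if __name__ == "__main__":
    for reply in ["SAFE", "UNSAFE", "Verdict: UNSAFE"]:
        print(reply, "->", choose_action(reply))
    # Pitfall: "SAFE" is a substring of "UNSAFE", so searching for "SAFE"
    # would match both verdicts.
    print(contains_verdict("UNSAFE", search_text="SAFE"))
```

Under this assumption, a prompt that asks the model to answer with a single word keeps the match unambiguous.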

Once saved, this automation will begin reviewing all new or edited posts using the Pixtral model and automatically flagging unsafe content.

What Happens After a Post Is Flagged?

When a post is marked UNSAFE by the model, Discourse automatically hides the content from public view and sends it to the review queue. From there, your moderators can inspect the flagged post at their own pace.

This approach takes the pressure off your team to make instant decisions, especially during high-traffic periods. Instead of needing to manually sift through every post, moderators can focus their attention where it matters most—on the small percentage of content that actually requires human judgment.

This system doesn’t replace moderators; it supports them by doing the first round of triage automatically.

Notes on Hosting and Data Privacy

This configuration uses Mistral’s own API, not Hugging Face’s. The “Hugging Face” option in Discourse only enables manual model configuration—it does not imply that inference requests go through Hugging Face.

Since Mistral is based in the EU and serves its models from EU infrastructure, this is a useful approach for communities that must comply with GDPR, DSA, or the UK Online Safety Act without sending data to the US.